Aggregation, settlement, execution

New dynamics between these layers and how we assign value to them

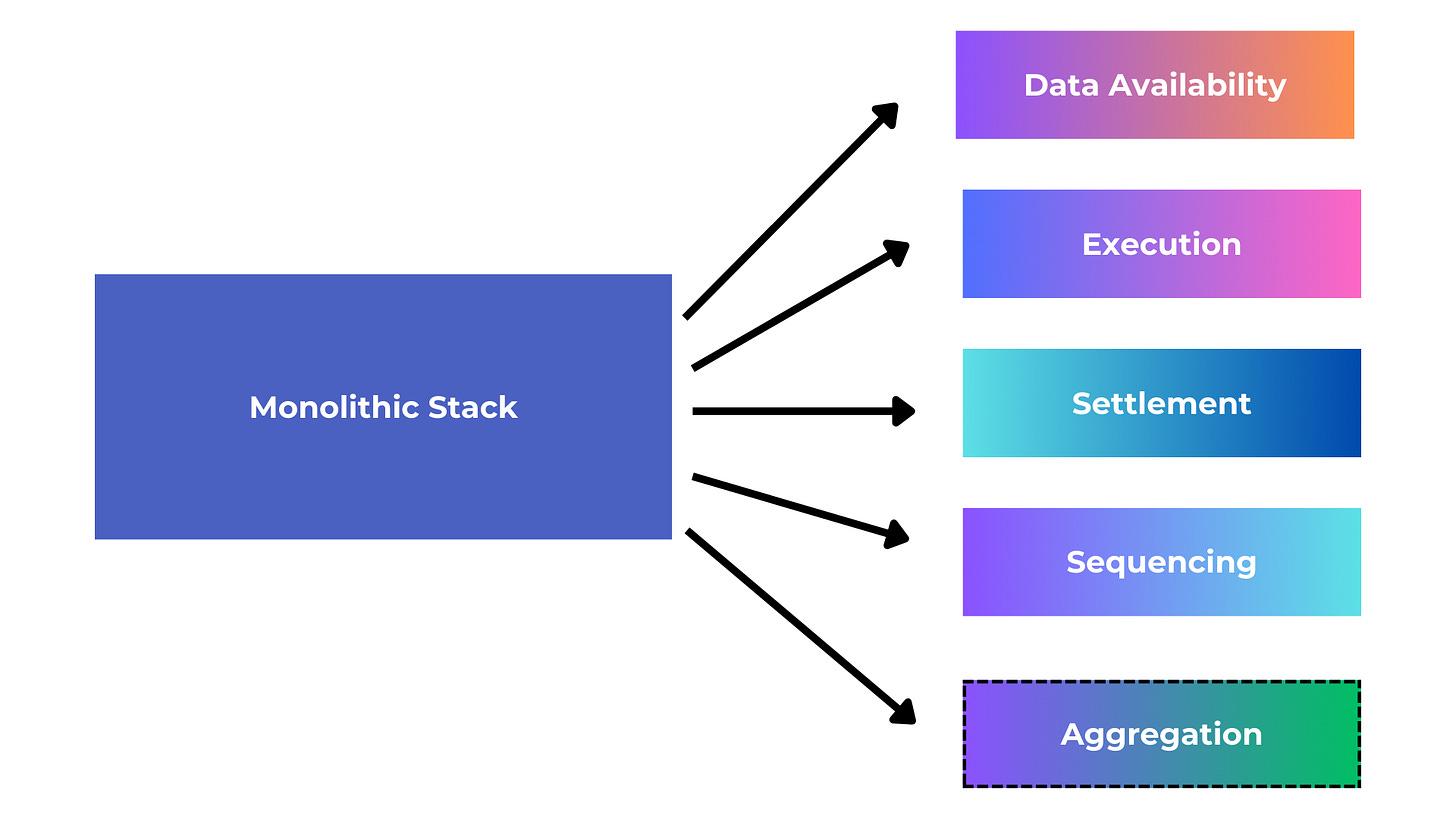

When it comes to both attention and innovation, not all components of the modular stack are created equal. While many projects have historically innovated at the data availability (DA) and sequencing layers, the execution and settlement layers were comparatively overlooked until recently.

The shared sequencer space not only has many projects competing for market share (Espresso, Astria, Radius, Rome, and Madara, to name a few) but also includes RaaS providers like Caldera and Conduit, which develop shared sequencers for the rollups that build on top of them. These RaaS providers can offer more favorable fee sharing to their rollups since their underlying business model isn’t solely dependent on sequencing revenue. All of these products exist alongside the many rollups that simply opt to run their own sequencer, decentralizing over time, in order to capture the fees it generates.

The sequencing market is unique compared to the DA space, which basically operates like an oligopoly composed of Celestia, Avail, and EigenDA. That makes it difficult for smaller new entrants beyond the main three to disrupt the space successfully. Projects either leverage the “incumbent” choice, Ethereum, or opt for one of the established DA layers depending on the tech stack and alignment they’re looking for. While using a DA layer is a massive cost saver, outsourcing the sequencer isn’t as obvious a choice (from a fee standpoint, not a security one), mostly because of the opportunity cost of giving up the fees it generates. Many also argue that DA will become a commodity, but we’ve seen in crypto that strong liquidity moats paired with unique, difficult-to-replicate underlying tech make it much harder to commoditize a layer in the stack. Regardless of these debates and dynamics, there are many DA and sequencer products live in production; in short, for some of the modular stack, “there are several competitors for every single service.”

The execution and settlement (and by extension aggregation) layers — which I believe have been comparatively underexplored — are beginning to be iterated on in new ways that align well with the rest of the modular stack.

Recap on execution + settlement layer relationship

The execution and settlement layers are tightly integrated: the settlement layer can serve as the place where the end results of state execution are defined. The settlement layer can also add enhanced functionality to the execution layer’s results, making the execution layer more robust and secure. In practice this can mean many different capabilities. For example, the settlement layer can act as an environment for the execution layer to resolve fraud disputes, verify proofs, and bridge to other execution layers.

It’s also worth mentioning that there are teams natively enabling the development of opinionated execution environments directly within their own protocol. One example is Repyh Labs, which is building an L1 called Delta. This is by nature the opposite of the modular stack’s design, but it still provides flexibility within one unified environment and comes with technical compatibility advantages, since teams don’t have to spend time manually integrating each part of the modular stack. The downsides, of course, are being siloed from a liquidity standpoint, not being able to choose the modular layers that best fit your design, and being too expensive.

Other teams are opting to build L1s extremely specific to one core functionality or application. One example is Hyperliquid, which has built an L1 purpose-built for their flagship native application, a perpetuals trading platform. Though their users need to bridge over from Arbitrum, their core architecture has no reliance on the Cosmos SDK or other frameworks, so it can be iteratively customized and hyperoptimized for their main use case.

Execution layer progress

The predecessors to this (last cycle, and still somewhat around) were general-purpose alt-L1s where basically the only feature that beat Ethereum was higher throughput. Historically, that meant projects had to build their own alt-L1 from scratch if they wanted substantial performance improvements, mostly because the tech wasn’t there yet on Ethereum itself, and in practice this meant natively embedding efficiency mechanisms directly into the general-purpose protocol. This cycle, these performance improvements are achieved through modular design, mostly on the most dominant smart contract platform there is (Ethereum). This way, both existing and new projects can leverage new execution layer infrastructure without sacrificing Ethereum’s liquidity, security, and community moats.

Right now, we’re also seeing more mixing and matching of different VMs (execution environments) as part of a shared network, which allows for developer flexibility as well as better customization on the execution layer. Layer N, for example, enables developers to run generalized rollup nodes (e.g. SolanaVM, MoveVM, etc. as the execution environments) and app-specific rollup nodes (e.g. perps dex, orderbook dex) on top of their shared state machine. They’re also working to enable full composability and shared liquidity between these different VM architectures, a historically difficult onchain engineering problem to solve at scale. Each app on Layer N can asynchronously pass messages to the others without delay on the consensus side, which has typically been crypto’s “comms overhead” problem. Each xVM can also use a different database architecture, whether it’s RocksDB, LevelDB, or a custom (a)sync database built from scratch. The interoperability piece works via a “snapshot system” (an algorithm similar to the Chandy-Lamport algorithm), where chains can asynchronously transition to a new block without requiring the system to pause. On the security side, fraud proofs can be submitted if a state transition was incorrect. With this design, their goal is to minimize time to execution while maximizing overall network throughput.
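To make the snapshot idea more concrete, here is a minimal sketch of the textbook Chandy-Lamport algorithm in Python. It is not Layer N’s implementation (the text only says their system is similar); the `Node` and `Network` classes, the balances, and the transfer scenario are all hypothetical. What it shows is the property that matters here: nodes keep sending and receiving while markers propagate, and the recorded snapshot is still consistent.

```python
from collections import deque

MARKER = "MARKER"  # control message that separates pre- and post-snapshot traffic


class Node:
    def __init__(self, name, balance):
        self.name = name
        self.balance = balance
        self.recorded_state = None  # local state captured for the snapshot
        self.recording = {}         # sender -> messages seen while channel is open
        self.channel_state = {}     # sender -> messages recorded as in flight


class Network:
    """Hypothetical toy network: one FIFO channel per ordered pair of nodes."""

    def __init__(self, balances):
        self.nodes = {n: Node(n, b) for n, b in balances.items()}
        self.channels = {(s, d): deque()
                         for s in balances for d in balances if s != d}

    def send(self, src, dst, amount):
        self.nodes[src].balance -= amount
        self.channels[(src, dst)].append(amount)

    def _record_and_broadcast(self, node):
        # Record local state, start recording every incoming channel,
        # and send a marker on every outgoing channel.
        node.recorded_state = node.balance
        for other in self.nodes:
            if other != node.name:
                node.recording[other] = []
                self.channels[(node.name, other)].append(MARKER)

    def start_snapshot(self, initiator):
        self._record_and_broadcast(self.nodes[initiator])

    def deliver(self, src, dst):
        msg = self.channels[(src, dst)].popleft()
        node = self.nodes[dst]
        if msg == MARKER:
            if node.recorded_state is None:
                self._record_and_broadcast(node)
            # A marker closes the channel src -> dst for this snapshot.
            node.channel_state[src] = node.recording.pop(src)
        else:
            node.balance += msg  # normal processing never pauses
            if src in node.recording:
                node.recording[src].append(msg)

    def run_until_quiet(self):
        while any(self.channels.values()):
            for (src, dst), queue in self.channels.items():
                if queue:
                    self.deliver(src, dst)

    def snapshot_total(self):
        total = 0
        for node in self.nodes.values():
            total += node.recorded_state
            total += sum(sum(msgs) for msgs in node.channel_state.values())
        return total


def demo():
    net = Network({"A": 100, "B": 50, "C": 25})
    net.send("A", "B", 10)   # in flight before the snapshot starts
    net.start_snapshot("A")  # A records its state and emits markers
    net.send("B", "A", 5)    # B keeps transacting; it has not seen a marker yet
    net.run_until_quiet()
    return net
```

Calling `demo().snapshot_total()` sums the recorded node states plus the messages recorded as in flight. In this scenario it matches the total funds in the system, even though transfers continued while the snapshot was being taken.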

MegaETH is driving progress in the execution layer space, particularly via their parallelization engine and in-memory DB where the sequencer can store the entire state in memory. On the architectural side, they leverage:

Native code compilation, which makes the L2 much more performant (compute-intensive contracts can get a massive speedup, and even contracts that are not very compute-intensive still see a ~2x+ speedup).

Relatively centralized block production, but decentralized block validation and verification.

Efficient state sync, where full nodes don’t need to re-execute transactions but do need to be aware of the state delta so they can apply it to their local database.

Merkle tree updating structure (normally, updating the tree is storage-intensive), where their approach is a new trie data structure that’s memory- and disk-efficient. In-memory computing allows them to fit the chain state inside memory, so when txs are executed they don’t have to go to disk, just memory.
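The state sync point above can be illustrated with a small Python sketch. It is a hypothetical model, not MegaETH’s actual format: the transfer-only transactions, the dict-based state, and the function names `execute_block` and `apply_delta` are all assumptions. The point is only that the block producer executes once and publishes the changed keys, and a full node applies those changes without re-running the transactions.

```python
from typing import Dict, List, Tuple

State = Dict[str, int]
Transfer = Tuple[str, str, int]  # (sender, receiver, amount), hypothetical tx shape


def execute_block(state: State, txs: List[Transfer]) -> Tuple[State, State]:
    """Block producer side: execute transfers and return (new_state, delta)."""
    new_state = dict(state)
    for sender, receiver, amount in txs:
        if new_state.get(sender, 0) < amount:
            continue  # skip transfers the sender cannot afford
        new_state[sender] = new_state.get(sender, 0) - amount
        new_state[receiver] = new_state.get(receiver, 0) + amount
    # The delta contains only the keys whose values changed in this block.
    delta = {k: v for k, v in new_state.items() if state.get(k) != v}
    return new_state, delta


def apply_delta(local_state: State, delta: State) -> None:
    """Full node side: apply the published delta, with no re-execution."""
    local_state.update(delta)


genesis = {"alice": 10, "bob": 0, "dave": 7}
block = [("alice", "bob", 4), ("bob", "carol", 1)]

producer_state, state_delta = execute_block(genesis, block)

full_node_state = dict(genesis)
apply_delta(full_node_state, state_delta)
```

Here `dave` is untouched by the block, so it never appears in `state_delta`; the full node ends up with the same state as the producer while only handling the three keys that changed.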

One more design that’s been explored and iterated on recently as part of the modular stack is proof aggregation, in which a prover creates a single succinct proof of multiple succinct proofs. First, let’s look at aggregation layers as a whole and their historical and present trends in crypto.

Assigning value to aggregation layers

Historically, in non-crypto markets, aggregators have gained smaller market share than platforms or marketplaces.

While I’m not sure if this holds for crypto in every case, it’s definitely true of decentralized exchanges, bridges, and lending protocols.

For example, the combined market cap of 1inch and 0x (two staple dex aggregators) is ~$1bb, a small fraction of Uniswap’s ~$7.6bb. This holds for bridges as well: bridge aggregators like Li.Fi and Socket/Bungee seemingly have less market share than platforms like Across. While Socket supports 15 different bridges, it actually has a similar total bridging volume to Across (Socket: $2.2bb, Across: $1.7bb), and Across only represents a small fraction of the volume on Socket/Bungee recently.

In the lending space, Yearn Finance was the first of its kind as a decentralized lending yield aggregator protocol, and its market cap is currently ~$250mm. By comparison, platform products like Aave (~$1.4bb) and Compound (~$560mm) have commanded higher valuations and more relevance over time.

Tradfi markets operate in a similar manner. For example, ICE (Intercontinental Exchange) US and CME Group each have ~$75bb market caps, while “aggregators” like Charles Schwab and Robinhood have ~$132bb and ~$15bb market caps, respectively. Schwab routes through ICE and CME among many other venues, yet the share of volume that routes through them is not proportional to their share of market cap. Robinhood handles roughly 119mm options contracts per month, while ICE handles ~35mm, and options contracts aren’t even a core part of Robinhood’s business model. Despite this, ICE is valued ~5x higher than Robinhood in the public markets. So Schwab and Robinhood, which act as application-level aggregation interfaces that route customer orderflow through various venues, do not command valuations as high as their volumes would suggest relative to ICE and CME.
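The comparisons above reduce to a few ratios, worked through here in Python. Every input is a figure quoted in the text; the variable names are just labels, and the “contracts per $bb of market cap” measure is an illustrative assumption added here to put volume and valuation on one scale, not a standard metric.

```python
# Figures as quoted in the text: market caps in $bb, options contracts in mm/month.
market_cap_bb = {
    "1inch+0x": 1.0,
    "Uniswap": 7.6,
    "ICE": 75.0,
    "Robinhood": 15.0,
}
options_contracts_mm = {"ICE": 35.0, "Robinhood": 119.0}

# Dex aggregators' combined market cap as a fraction of Uniswap's.
dex_agg_share = market_cap_bb["1inch+0x"] / market_cap_bb["Uniswap"]

# How many times larger ICE's market cap is than Robinhood's.
ice_vs_robinhood_cap = market_cap_bb["ICE"] / market_cap_bb["Robinhood"]

# How many times more options contracts Robinhood handles than ICE.
robinhood_vs_ice_volume = (
    options_contracts_mm["Robinhood"] / options_contracts_mm["ICE"]
)

# Illustrative measure: monthly options contracts (mm) per $bb of market cap.
contracts_per_bb = {
    name: options_contracts_mm[name] / market_cap_bb[name]
    for name in options_contracts_mm
}

print(f"1inch+0x vs Uniswap market cap: {dex_agg_share:.0%}")
print(f"ICE vs Robinhood market cap: {ice_vs_robinhood_cap:.1f}x")
print(f"Robinhood vs ICE options volume: {robinhood_vs_ice_volume:.1f}x")
```

On these numbers, Robinhood handles about 3.4x ICE’s monthly options contracts while being valued at one fifth of ICE, which is the gap the paragraph above points to. The measure ignores everything else each business does, so it is only a rough illustration.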

We as consumers simply assign less value to aggregators.

This may not hold in crypto if aggregation layers are embedded into a product/platform/chain. If aggregators are tightly integrated directly into the chain, obviously that’s a different architecture and one I’m curious to see play out. An example is Polygon’s AggLayer, where devs can easily connect their L1 and L2 into a network that aggregates proofs and enables a unified liquidity layer across chains that use the CDK.

This model works similarly to Avail’s Nexus Interoperability Layer, which includes a proof aggregation and sequencer auction mechanism, making their DA product much more robust. Like Polygon’s AggLayer, each chain or rollup that integrates with Avail becomes interoperable within Avail’s existing ecosystem. In addition, Avail pools ordered transaction data from various blockchain platforms and rollups, including Ethereum, all Ethereum rollups, Cosmos chains, Avail rollups, Celestia rollups, and different hybrid constructions like Validiums, Optimiums, and Polkadot parachains, among others. Developers from any ecosystem can then permissionlessly build on top of Avail’s DA layer while using Avail Nexus, which can be used for cross-ecosystem proof aggregation and messaging.

Nebra focuses specifically on proof aggregation and settlement, and can aggregate across different proof systems: for example, aggregating xyz system proofs and abc system proofs so that you have agg_xyzabc (versus aggregating within proof systems, which would give you agg_xyz and agg_abc). This architecture uses UniPlonK, which standardizes the verifiers’ work for families of circuits, making verification across different PlonK circuits much more efficient and feasible. At its core, it uses zero-knowledge proofs themselves (recursive SNARKs) to scale verification, which is typically the bottleneck in these systems. For customers, the “last-mile” settlement is made much easier because Nebra handles all the batch aggregation and settlement; teams just need to change an API contract call.

Astria is working on interesting designs around how their shared sequencer can work with proof aggregation as well. They leave the execution side to the rollups themselves which run execution layer software over a given namespace of a shared sequencer — essentially just the “execution API” which is a way for the rollup to accept sequencing layer data. They can also easily add support for validity proofs here to ensure a block did not violate EVM state machine rules.

In the end-to-end flow, a product like Astria serves as the #1 → #2 step (unordered txs → ordered block), the execution layer / rollup node handles #2 → #3 (ordered block → executed block), and a protocol like Nebra serves as the last mile, #3 → #4 (executed block → succinct proof). Nebra (or Aligned Layer) could also be a theoretical fifth step where the proofs are aggregated and then verified afterward. Sovereign Labs is working on a concept similar to that last step as well, where proof aggregation based bridging is at the heart of their architecture.
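The hand-offs between these steps can be sketched as a small Python pipeline. This is only a model of the data flow, under loud assumptions: the function names, the `Tx` and `ExecutedBlock` types, and the ordering rule are hypothetical, and the “proofs” are plain SHA-256 hashes standing in for real succinct proofs. Nothing here reflects how Astria, Nebra, or any rollup node is actually implemented.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Tuple


def digest(*parts: str) -> str:
    """SHA-256 over the joined parts; a placeholder commitment, not a proof."""
    return hashlib.sha256("|".join(parts).encode()).hexdigest()


@dataclass(frozen=True)
class Tx:
    sender: str
    nonce: int
    payload: str


@dataclass(frozen=True)
class ExecutedBlock:
    rollup: str
    txs: Tuple[Tx, ...]
    state_root: str


def sequence(mempool: List[Tx]) -> List[Tx]:
    """#1 -> #2: unordered txs -> ordered block (ordering rule is hypothetical)."""
    return sorted(mempool, key=lambda tx: (tx.sender, tx.nonce))


def execute(rollup: str, ordered: List[Tx],
            prev_root: str = "genesis") -> ExecutedBlock:
    """#2 -> #3: ordered block -> executed block carrying a new state root."""
    root = prev_root
    for tx in ordered:
        root = digest(root, tx.sender, str(tx.nonce), tx.payload)
    return ExecutedBlock(rollup, tuple(ordered), root)


def prove(block: ExecutedBlock) -> str:
    """#3 -> #4: executed block -> 'proof' (placeholder hash, NOT a SNARK)."""
    return digest("proof", block.rollup, block.state_root)


def aggregate(proofs: List[str]) -> str:
    """Possible fifth step: many proofs -> one artifact (placeholder hash)."""
    return digest("agg", *sorted(proofs))


mempool = [Tx("bob", 1, "b1"), Tx("alice", 0, "a0"), Tx("bob", 0, "b0")]
ordered = sequence(mempool)
proof_a = prove(execute("rollup-a", ordered))
proof_b = prove(execute("rollup-b", ordered))
aggregated = aggregate([proof_a, proof_b])
```

Each stage consumes only the previous stage’s output, which is why these roles can be filled by separate products. A real aggregation layer would replace `prove` and `aggregate` with actual proof generation and recursive verification.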

In aggregate, some application layers are beginning to own the infrastructure underneath them, partly because remaining just a high-level application can create incentive issues and high user adoption costs if they don’t control the stack underneath. On the flip side, as infrastructure costs are continually driven down by competition and tech advancements, integrating with modular components is becoming much more affordable for applications/appchains. I believe this dynamic is much more powerful, at least for now.

With all of these innovations across the execution, settlement, and aggregation layers, more efficiency, easier integrations, stronger interoperability, and lower costs become much more achievable. What all this really leads to is better applications for users and a better developer experience for builders. This is a winning combination that leads to more innovation, and a faster velocity of innovation, at large, and I’m looking forward to seeing what unfolds.

Huge thank you to Joey Krug, rain&coffee, Shumo Chu, Josh Bowen, Gaby Goldberg, Dima Romanov, George Lambeth, Scott Milat, Yilong Li for informing my thinking on this piece.

If I may ask,

why is the DA space already an oligopoly? (Does it require a lot of liquidity/security?)

Shared sequencers and aggregation layers are hard to build; they take a year to reach market. Until then, the existing bridges will improve by 10x and will provide a single-chain experience.

The user-facing applications (execution layer, DApps & appchains) will capture more value than the infra layer.